The Zero Trust Hub

Trends, insights, and resources for today's cybersecurity leaders. Updated weekly.

AI Is Still An Application. That’s Exactly Why It Needs Zero Trust.

Public Sector CTO

When I was a federal CIO, I often used the Tootsie Pop analogy to describe enterprise security: hard and crunchy on the outside, but soft on the inside.

Once an attacker got in, they often had far more access than they should have.

The problem hasn’t gone away with AI. It’s just gotten bigger, faster, and harder to see.

AI systems are being deployed across environments to improve visibility, automate analysis, and help teams keep up with overwhelming volumes of data. Those benefits are real.

But AI also introduces new pathways, dependencies, and risks. If we don’t secure AI systems properly, we’re not just adding another tool; we’re widening the fallout of the next breach.

AI systems are applications, and applications get breached

AI is powerful. It is transformative. And it absolutely changes the scale and speed of risk.

What it doesn’t change is the need for control.

One of the more dangerous reactions to AI is to treat it as either magical or unknowable. It’s neither.

AI runs on servers. It’s built with code. It connects to data sources, services, and networks. From a security standpoint, it’s an application — a very powerful one, but still an application.

And like every other application, it can be compromised.

Attackers don’t need to “hack the AI” in a Hollywood sense. They can compromise the infrastructure around it. They can exploit excessive internal access and abuse trusted connections.

Once inside, an AI system can unintentionally amplify damage by accessing data or systems it was never meant to touch.

That’s why protecting AI starts with accepting that intrusions are inevitable. The goal is not perfect prevention but limited impact.

Why Zero Trust matters for AI security

Zero Trust security assumes nothing is trusted by default, even inside the enterprise.

That principle is critical because AI systems rely on constant access to data across increasingly complex environments.

AI thrives on data, but modern on-premises, cloud, and hybrid infrastructures generate more activity than humans can effectively analyze on their own. AI helps by surfacing patterns, highlighting anomalies, and improving visibility into how systems communicate and where data flows.

Visibility alone isn’t enough. The real question is whether those connections should exist at all.

Zero Trust answers that by requiring every AI connection to be intentional and continuously evaluated. AI systems should only access the data and services they need, no more. That applies to inbound access, outbound access, and internal east-west traffic.
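To make “intentional” concrete, here is a minimal sketch of a default-deny allowlist for a single AI workload. It is not any product’s policy engine. The workload names, ports, and the `Flow` and `is_allowed` names are hypothetical. Every flow, whether inbound, outbound, or east-west, is denied unless an explicit rule permits it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One connection attempt. All workload names below are hypothetical."""
    src: str
    dst: str
    port: int


# Hypothetical allowlist: each rule is an intentional (src, dst, port) tuple.
# Anything not listed is denied, whether inbound, outbound, or east-west.
ALLOW_RULES = {
    ("web-frontend", "ai-assistant", 443),  # inbound: users reach the AI app
    ("ai-assistant", "vector-db", 5432),    # east-west: the one data store it needs
    ("ai-assistant", "model-api", 443),     # outbound: the inference endpoint
}


def is_allowed(flow: Flow) -> bool:
    """Default deny: permit a flow only if an explicit rule matches it."""
    return (flow.src, flow.dst, flow.port) in ALLOW_RULES


attempts = [
    Flow("web-frontend", "ai-assistant", 443),
    Flow("ai-assistant", "vector-db", 5432),
    Flow("ai-assistant", "hr-database", 5432),      # never intended: denied
    Flow("ai-assistant", "pastebin.example", 443),  # unexpected egress: denied
]

for f in attempts:
    verdict = "ALLOW" if is_allowed(f) else "DENY"
    print(f"{f.src} -> {f.dst}:{f.port} {verdict}")
```

The sketch checks each flow as it is attempted. A real deployment would also re-evaluate rules as workloads, identities, and context change, which is what “continuously evaluated” implies.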

By enforcing clear internal controls, Zero Trust limits how far an attacker can move if an AI system is compromised and reduces the risk of unintended data exposure or policy violations.

The goal isn’t perfect breach prevention but consistent breach containment. Zero Trust is built to keep inevitable failures from becoming disasters.

Protecting AI from attackers and from itself

One of the subtler risks with AI is not malicious intent but unintended behavior.

I’ve seen cases where AI systems that were supposed to be restricted to internal data sources turned out to have access to external systems, which produced unexpected and embarrassing results.

That kind of exposure doesn’t require an attacker, just insufficient controls.

Zero Trust forces organizations to ask uncomfortable but necessary questions, such as:

- What data can this AI application access?

- What services can it talk to?

- What happens if it’s compromised?

If you can’t answer those questions confidently, your guardrails aren’t sufficient.
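One way to answer “What happens if it’s compromised?” is to treat allowed connections as a directed graph and list everything reachable from the AI workload. The minimal sketch below assumes that an attacker who controls a workload can use any connection that workload is permitted to make, then pivot from each system it reaches. The workload names and the `blast_radius` helper are hypothetical.

```python
from collections import deque

# Hypothetical allowed connections: workload -> workloads it may connect to.
FLAT_NETWORK = {
    "ai-assistant": {"vector-db", "model-api", "file-share"},
    "file-share": {"hr-database", "finance-db"},
}

# The same environment after segmentation: the AI app no longer reaches file-share.
SEGMENTED = {
    "ai-assistant": {"vector-db", "model-api"},
    "file-share": {"hr-database", "finance-db"},
}


def blast_radius(graph: dict[str, set[str]], start: str) -> set[str]:
    """Breadth-first search: every workload reachable from `start`."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, set()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(start)  # report only what the compromised workload can reach
    return seen


print(sorted(blast_radius(FLAT_NETWORK, "ai-assistant")))
# -> ['file-share', 'finance-db', 'hr-database', 'model-api', 'vector-db']
print(sorted(blast_radius(SEGMENTED, "ai-assistant")))
# -> ['model-api', 'vector-db']
```

Removing a single unneeded connection shrinks the reachable set from five systems to two. That difference is the containment Zero Trust is meant to deliver.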

AI will magnify the next breach

AI is already changing how businesses operate, defend, and respond. But what hasn’t changed is the reality of modern threats.

Attackers will still get in. Systems will still fail. The difference is that AI can magnify both success and failure at machine speed.

That’s why the way we secure AI matters more than how quickly we deploy it. Treating AI as exceptional or untouchable only increases risk.

Treating it as another application, subject to Zero Trust security principles, is what keeps its impact manageable when something inevitably goes wrong.

STATSHOT

AI-Generated Risk

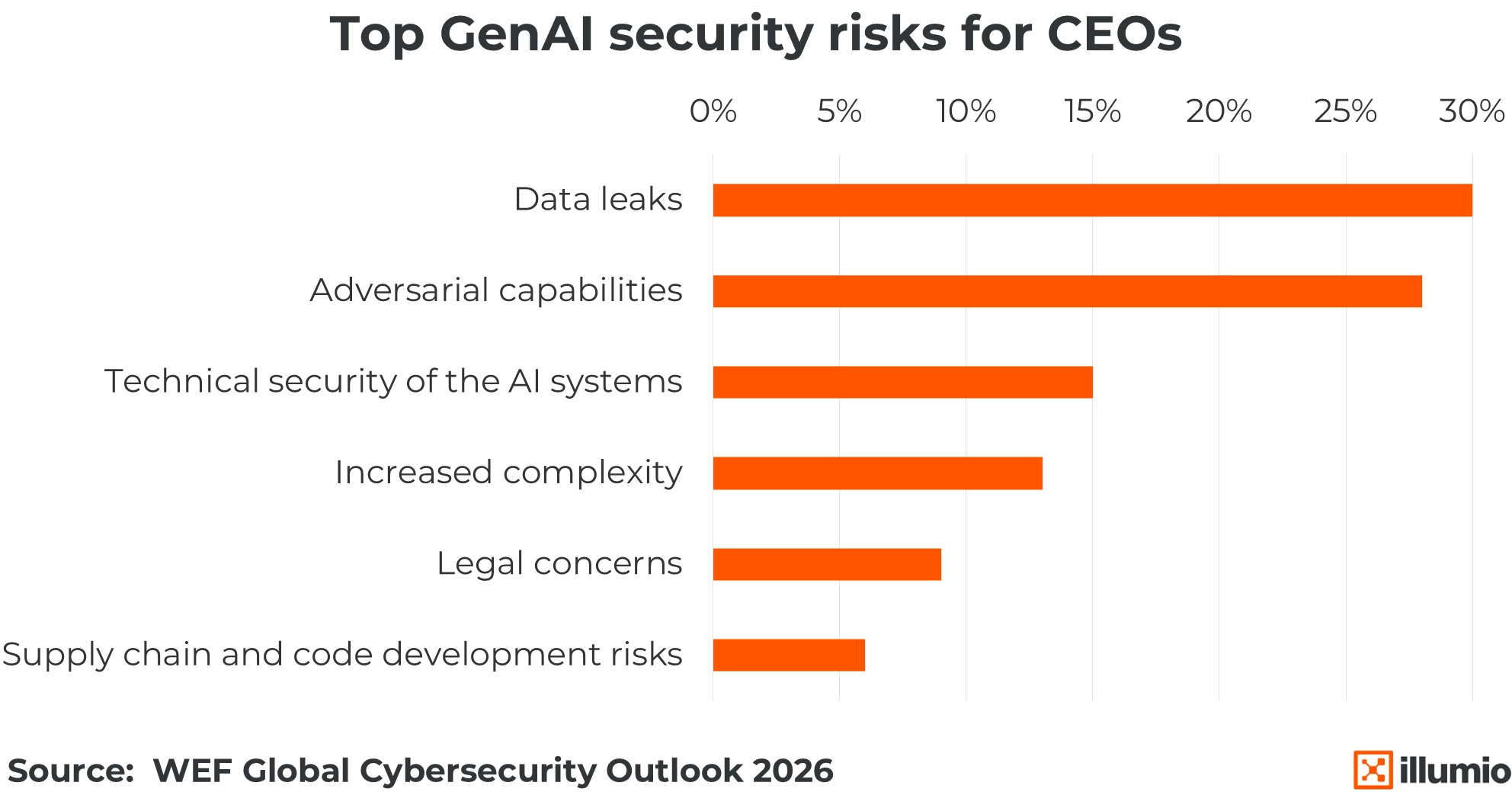

Nearly a third of CEOs rank data leaks as the top generative AI risk, with adversarial capabilities close behind. Technical security, legal concerns, and supply chain risks trail significantly. For security teams, this signals a shift in priority: reduce sensitive data exposure and limit attacker movement inside the environment before AI accelerates the impact.

Why Doing the Basics in Cyber Is So Hard—and So Necessary

On this episode of The Segment, Raghu Nandakumara talks with author and cybersecurity investor Ross Haleliuk about why most breaches stem from neglected fundamentals, how bad incentives kill security, and how to see past AI hype to what actually works.

Why OpenClaw Is a Wake-Up Call for Agentic AI Security

OpenClaw is a critical warning: autonomous AI agents are the new insider threat. Here’s how deep observability and Zero Trust contain risks by stopping lateral movement, protecting your critical assets, and securing the modern enterprise against evolving vulnerabilities.

Top Cybersecurity News Stories From January 2026

This month’s stories span geopolitics, supply chain breaches, and day-to-day security operations. Together, they show how modern attacks blur the line between civilian and military systems, turn common platforms into force multipliers, and overwhelm teams with noise.
